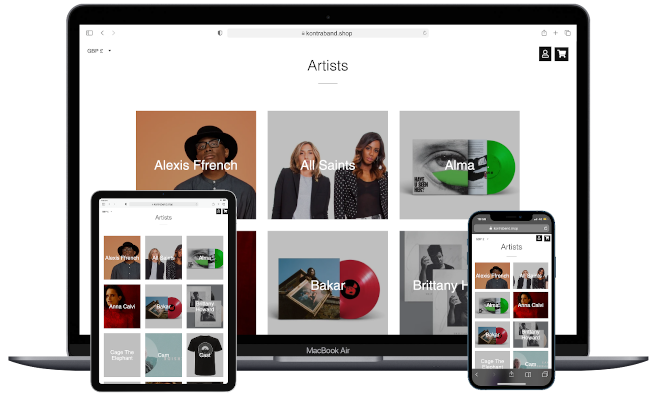

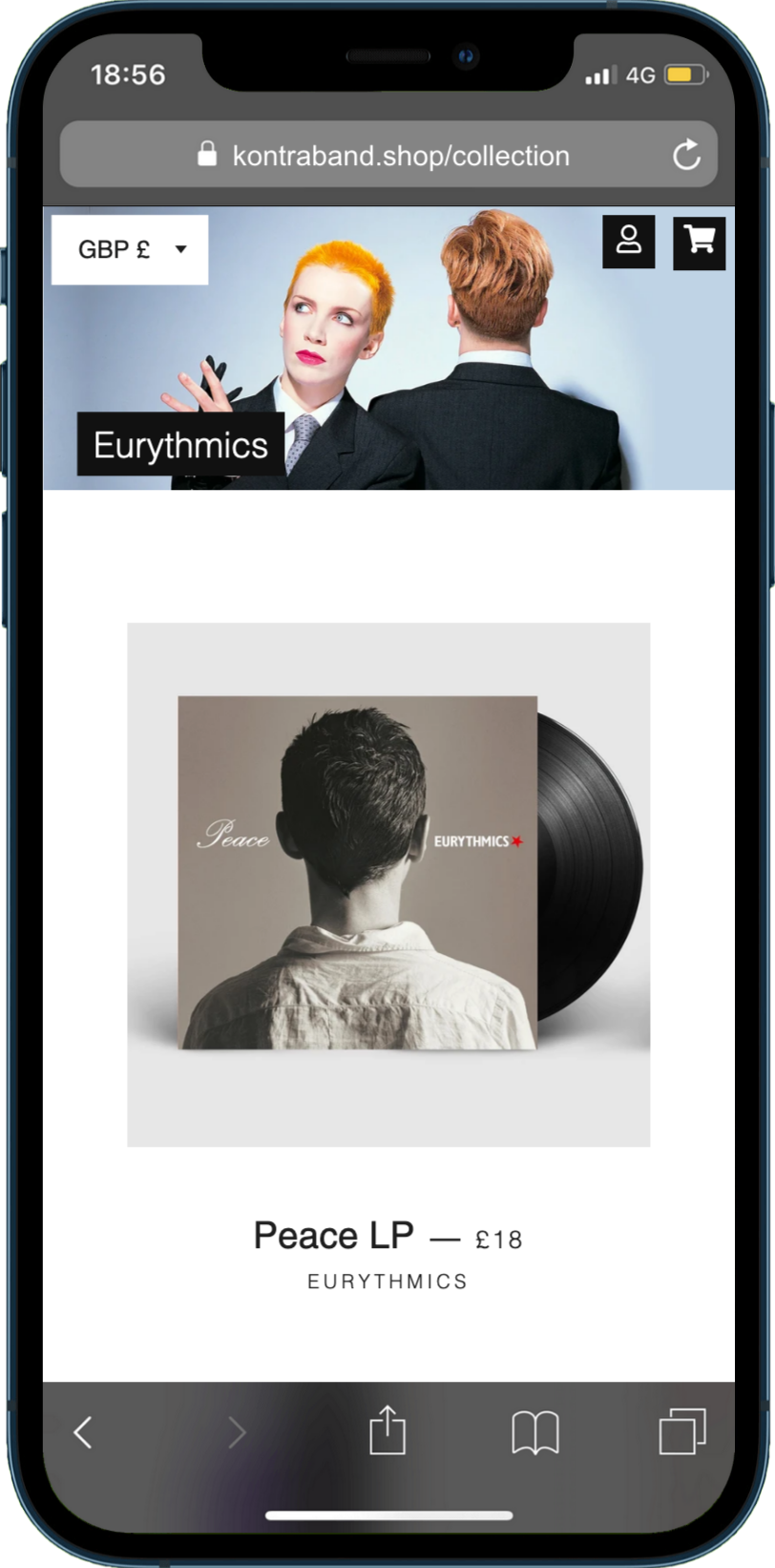

Multi-Store Sony Music UK merchandising company


MAGENTO 2 MIGRATION | INFRASTRUCTURE DEPLOYMENT & MULTITIERED AUTOMATION

Kontraband (KB) is a London-based music merchandising company that was acquired by Sony Music UK in September 2019. KB has been on the market for 20 years, selling merchandise for big names such as Agnes Obel, Anna Calvi, Archive, Björk, Black Grape, 50 Cent, Iggy Pop and many others.

Location

United Kingdom

Sector

Consumer

Service

Web Development eCommerce & SLA

Background

Kontraband reached out to us because they were having issues with code deployments on their AWS infrastructure, which hosts multiple stores owned by individuals and brands.

AWS and Magento2 experts

After Wirehive, the hosting provider, set up the AWS infrastructure and the deployment systems, Kontraband needed an external provider that understood how Magento 2 works with AWS, and this is where we came in. The underlying cause was that neither party understood the other's technology well enough to move the project forward.

Issue

The key issue was understanding how Magento interacts with AWS. Wirehive looked into the problems but concluded that they were application issues, not server issues. Paul Entwisle, Director of Kontraband, was concerned that Wirehive might not have set up AWS correctly for running the application: they understood AWS, but not Magento. Kontraband felt stuck and, because the problems were affecting the business, turned to us to resolve them.

The problem breakdown

01. Code Deployment Time Reduction

Code updates for the individual stores were requested frequently, almost every hour. Fresh updates were deployed automatically every day at 08:00 UTC, triggered by time-based auto scaling, but the automated deployment (AWS CodeDeploy) kept timing out. Soon after auto scaling launched new EC2 instances, the store that was supposed to receive the update started returning 501 server errors, and the errors then spread to the other stores. Every automated deployment required manual intervention at the infrastructure level.

02. Auto Scaling Issue

During auto scaling, the system attached new instances to the production environment, but static content was not deployed to all of them. Wirehive's workaround was to remove static content deployment from the automated process, which forced the KB team to deploy static content manually. If traffic spiked overnight and the system needed to scale up (add servers), nobody from KB would be at their computer to run the manual deployment. The manual process also bypassed CodeDeploy entirely: KB would create a new instance, connect to it via SSH and deploy the static content directly on the server.
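One way to keep a freshly scaled-out instance from serving a broken frontend is to check that static content exists before the instance goes into service. The sketch below assumes such a check runs on the new instance (for example from a deployment hook, which is our assumption) and uses `pub/static/deployed_version.txt`, the version file that Magento's static content deployment typically writes, as the marker. The helper name is hypothetical, and this is not the setup Kontraband actually used.

```python
from pathlib import Path

# Hypothetical readiness check: Magento's static content deployment normally
# writes pub/static/deployed_version.txt, so a missing or empty file suggests
# this instance has not had its static content deployed yet.
VERSION_FILE = Path("pub") / "static" / "deployed_version.txt"


def static_content_deployed(magento_root: str) -> bool:
    """Return True if the static content version file exists and is non-empty."""
    version_file = Path(magento_root) / VERSION_FILE
    return version_file.is_file() and version_file.read_text().strip() != ""
```

A hook that exits non-zero when `static_content_deployed(...)` returns False would keep the instance out of service instead of letting it return errors.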

Solution

Setting up a good deployment strategy was crucial to their success. On examination, we found that Wirehive's automated deployment used Magento 2's default 'quick' static content deployment strategy. Paul's suspicion that Wirehive didn't understand Magento 2 was correct. They had been struggling with this issue for weeks; once we were granted access to the AWS console, we were able to apply a quick, low-key fix.

Code Deployment Time Reduction

After a deep dive into the AWS CodeBuild setup, we quickly identified the problem: the build was exceeding CodeDeploy's one-hour timeout, so deployments were failing part-way through static content generation. Looking at the Kontraband stores, we could see a massive amount of static content being deployed with every release. Even a small CSS, JavaScript or SEO content update caused Magento to trigger static content deployment across all stores.
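To make the timeout arithmetic concrete, the sketch below compares the average deployment durations quoted in this case study (70 minutes before the change, 15 minutes after) against CodeDeploy's limit of one hour (3600 seconds) per lifecycle event hook. For simplicity it assumes the whole build runs inside a single hook; the function name is illustrative.

```python
# CodeDeploy allows each lifecycle event hook at most 3600 seconds (one hour).
CODEDEPLOY_HOOK_LIMIT_S = 3600


def hook_headroom_s(duration_min: float, limit_s: int = CODEDEPLOY_HOOK_LIMIT_S) -> float:
    """Seconds left before the hook times out; a negative value means it fails."""
    return limit_s - duration_min * 60


# Average durations from this case study (assumed to run in a single hook).
for label, minutes in [("before (quick strategy)", 70), ("after (compact strategy)", 15)]:
    left = hook_headroom_s(minutes)
    status = "OK" if left > 0 else "TIMES OUT"
    print(f"{label}: {minutes} min -> {status} ({left:+.0f} s headroom)")
```

The 70-minute run ends 600 seconds over the limit, while the 15-minute run leaves 2700 seconds of headroom.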

Magento 2.2 introduced the 'compact' strategy for deploying static view files (web assets), and this strategy was the key to Kontraband's automated deployment setup. To switch to compact deployment, we updated the Ansible roles used within AWS CodeBuild. After the switch, the average deployment time dropped from 70 minutes to 15 minutes.
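For reference, the strategy is selected with the `--strategy` option of Magento's `setup:static-content:deploy` command (`standard`, `quick` or `compact`). The Python helper below only builds that command line; the `en_GB` locale, the optional job count and the helper itself are illustrative assumptions, and the actual Ansible roles in Kontraband's CodeBuild setup are not reproduced here.

```python
import shlex
from typing import Iterable, List, Optional

# Strategies accepted by Magento 2.2+ for static view file deployment;
# "quick" is the default, which is what the original pipeline relied on.
STRATEGIES = {"standard", "quick", "compact"}


def static_deploy_command(
    locales: Iterable[str], strategy: str = "compact", jobs: Optional[int] = None
) -> List[str]:
    """Build (but do not run) a setup:static-content:deploy command line."""
    if strategy not in STRATEGIES:
        raise ValueError(f"unknown strategy: {strategy}")
    cmd = ["php", "bin/magento", "setup:static-content:deploy", f"--strategy={strategy}"]
    if jobs is not None:
        cmd.append(f"--jobs={jobs}")  # optional number of parallel jobs
    cmd.extend(locales)  # positional language codes, e.g. en_GB (illustrative)
    return cmd


print(shlex.join(static_deploy_command(["en_GB"])))
```

In a pipeline, the resulting list could be passed to `subprocess.run(cmd, check=True, cwd=magento_root)`, where `magento_root` is a hypothetical path to the installation.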

Paul noticed the difference and was so impressed that he messaged us whilst travelling: “That looks really positive! Well done!! I’m on a train at the moment so can’t type more – but this looks like a massive improvement.” As always, I passed this on to my team and praised them for doing a great job.

Auto Scaling Issue

Whenever a new instance was attached to the system, media and static files (CSS, JavaScript) simply disappeared from the store frontend. The auto scaling setup did two things: it scaled on system load, and it applied time-based scaling to reduce costs. Every day at 19:00 UTC it scaled the environments back to a single server, then at 08:30 UTC it grew them to a minimum of 2 and a maximum of 4 instances for the day. S3 was used to store the Ansible roles and code deployment scripts.
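The time-based part of that schedule can be expressed as two recurring scheduled actions on an EC2 Auto Scaling group. The sketch below only builds the keyword arguments for boto3's `put_scheduled_update_group_action`; the group name and action names are hypothetical, and the overnight `MaxSize` of 4 is our assumption (the case study only says the environments scaled back to a single server).

```python
from typing import Any, Dict, List


def scheduled_actions(asg_name: str) -> List[Dict[str, Any]]:
    """Keyword arguments for autoscaling.put_scheduled_update_group_action.

    Times come from this case study; names and the overnight MaxSize are
    illustrative assumptions.
    """
    return [
        {
            "AutoScalingGroupName": asg_name,
            "ScheduledActionName": "scale-in-evening",  # hypothetical name
            "Recurrence": "0 19 * * *",  # every day at 19:00 UTC
            "MinSize": 1,
            "MaxSize": 4,  # assumption: leaves room for load-based scaling overnight
            "DesiredCapacity": 1,  # back to a single server
        },
        {
            "AutoScalingGroupName": asg_name,
            "ScheduledActionName": "scale-out-morning",  # hypothetical name
            "Recurrence": "30 8 * * *",  # every day at 08:30 UTC
            "MinSize": 2,
            "MaxSize": 4,
        },
    ]
```

With AWS credentials configured, each dict could be applied with `boto3.client("autoscaling").put_scheduled_update_group_action(**action)`. Recurring schedules are evaluated in UTC unless a `TimeZone` is supplied, which matches the UTC times above.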

Wirehive maintained that the issue was with the application, pointing out that static content loaded correctly on staging. What they didn't know was that Magento 2 runs in different modes: staging ran in developer mode and live ran in production mode. The most important difference is that in developer mode static content is not deployed in advance; Magento generates it on demand. That is why CodeDeploy ran smoothly for the staging deployment group in AWS.
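The mode difference explains why staging looked healthy. As a simplified sketch covering only developer and production mode (Magento also has a default mode, left out here), the helper below encodes whether a freshly launched instance needs a static content deployment before it can serve the frontend. The staging/live mapping comes from this case study; the names are illustrative. On a real installation, the current mode can be checked with `bin/magento deploy:mode:show`.

```python
from typing import Dict

# Environment-to-mode mapping as described in this case study.
ENVIRONMENT_MODES: Dict[str, str] = {"staging": "developer", "live": "production"}


def needs_static_deploy(mode: str) -> bool:
    """Return True if static view files must be deployed before serving.

    developer:  Magento publishes static files on demand, so a fresh
                instance works without a deployment step.
    production: by default Magento serves static files only from
                pub/static, so an empty pub/static breaks the frontend.
    """
    if mode == "developer":
        return False
    if mode == "production":
        return True
    raise ValueError(f"mode not covered by this sketch: {mode}")


for env, mode in ENVIRONMENT_MODES.items():
    print(env, mode, "needs deploy" if needs_static_deploy(mode) else "on demand")
```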

After examining the Magento 2 installation and its automated deployment scripts, we noticed that the entire pub directory was shared, and that turned out to be the problem. Every time static content is deployed in Magento 2, the pub folder is automatically flushed, so whenever a newly launched instance deployed its static content, it wiped files that the other instances were serving. With AWS auto scaling, the instances should not share the whole contents of the pub directory. We edited the AWS CodeBuild configuration again so that pub/static is no longer shared, while pub/media remains a shared resource. We then ran a quick stress test to force auto scaling: the update went through, and neither product images nor static content went missing from the store frontends during scaling.
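The resulting split can be summarised as a simple path rule. The sketch below is hypothetical: it classifies a path relative to the Magento root as belonging on shared storage (`pub/media`) or on the instance's local disk (everything else, including `pub/static`). The case study does not say which shared storage backed `pub/media`, so none is assumed, and the example file paths are made up.

```python
from pathlib import PurePosixPath

# Hypothetical layout mirroring the fix: pub/media (product images, uploads)
# sits on storage shared by every instance; pub/static is deployed per
# instance, so one instance's deployment cannot flush another's files.
SHARED_ROOT = PurePosixPath("pub/media")


def is_under(path: PurePosixPath, root: PurePosixPath) -> bool:
    """True if path is root itself or lies inside it (component-wise)."""
    return path == root or root in path.parents


def storage_for(relative_path: str) -> str:
    """Return 'shared' for pub/media paths and 'local' for everything else."""
    path = PurePosixPath(relative_path)
    return "shared" if is_under(path, SHARED_ROOT) else "local"


for p in [
    "pub/media/catalog/product/t/s/tshirt.jpg",
    "pub/static/frontend/Vendor/theme/en_GB/css/styles.css",
    "pub/mediafiles/readme.txt",
]:
    print(p, "->", storage_for(p))
```

Comparing path components rather than string prefixes keeps a look-alike directory such as `pub/mediafiles` from being treated as shared.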

Results

After we successfully reintegrated Kontraband’s Magento 2 installation with AWS, Paul kept us on an SLA contract to support his business growth, and any further AWS maintenance or Magento development tasks are passed directly to us. This has led to us creating migration strategies for several of Kontraband’s clients who need to move from Magento 1 to Magento 2, so expect another Kontraband case study soon.

Let's Start a Conversation

Do you have a project or collaboration that you would like to discuss with us or are you just curious to hear more about how we can help?
