The fourth quarter is the busiest time of the year. It’s the best time to close a good volume of sales by pushing high-quality traffic to your Magento-based e-commerce store, and to your servers. Wherever you plan to host, make sure your environment autoscales, so you can prevent outages and breaks if traffic suddenly spikes. Speed optimization and fast page loads aren’t only about passing Google PageSpeed Insights and the GTmetrix speed score.

On-demand scaling is available when you host your application on cloud-based infrastructure. You can also scale a dedicated host up or down, but in that case it has to be done manually, which is time consuming. Manual scaling often takes days or even weeks, since everything has to be done physically by your engineers (the hosting staff). So always consider the cloud if it is an option. It will save you money, and deploying new web nodes, then terminating them once you no longer need them, is a matter of minutes.

For example, say you need more power for your web server. In a cloud environment you simply deploy one or more additional web nodes by replicating your application onto new logical entities, then split traffic across all nodes instead of sending all of it to one server. This is a simple process for an experienced sysadmin or DevOps engineer, and you will see the advantages of this hosting strategy within minutes.
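To make “split traffic across all nodes” concrete, here is a minimal round-robin sketch in Python. It is an illustration only, assuming a hypothetical pool of three web nodes (the IP addresses are made up); in practice a load balancer such as HAProxy does this for you, usually with health checks and other balancing algorithms on top.

```python
from collections import Counter
from itertools import cycle

# Hypothetical backend addresses; in a real setup these would be the web nodes
# registered behind your load balancer.
WEB_NODES = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]


def round_robin(nodes):
    """Return an endless iterator that hands out the nodes in turn."""
    return cycle(nodes)


def distribute(requests, nodes):
    """Assign each request to the next node in round-robin order.

    Returns a Counter mapping node address -> number of requests it received.
    """
    picker = round_robin(nodes)
    counts = Counter()
    for _ in range(requests):
        counts[next(picker)] += 1
    return counts


if __name__ == "__main__":
    # 3,000 requests over three nodes: 1,000 each.
    print(distribute(3000, WEB_NODES))
    # Scaling out is just adding a node to the pool: 750 each.
    print(distribute(3000, WEB_NODES + ["10.0.0.14"]))
```

Adding a fourth node cuts each node’s share of the same traffic from 1,000 to 750 requests, which is the whole point of scaling out horizontally.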

SCALING DIAGRAM

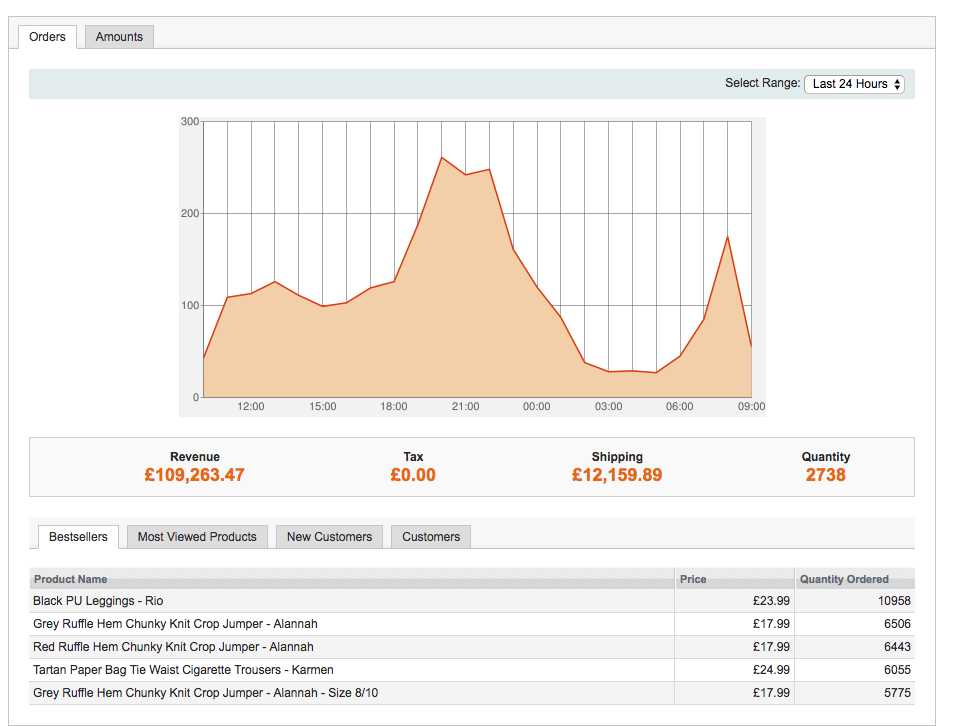

As we all know, traffic likes to (and should) spike in the fourth quarter.

To scale easily, keep it simple. As always, a great choice for Magento hosting would be AWS. As the main player in Magento cloud hosting, AWS gives you high availability, fast page loads and security, especially for high-traffic online stores where the auto-scaling feature takes over.

On the other hand, another great cloud platform, more affordable and better suited to smaller businesses with average traffic, is Linode. Deployment complexity is much lower than on AWS. For example, you have to attach your instances manually (no auto-scaling triggers are available for Linodes as of yet), but this goes fast, and it is less expensive than AWS. Anyway, we will keep the list of platforms to these two, since we have used both of them successfully for years now.

AWS works better for some stores, while Linode works better for others.

There is no such thing as the ‘best hosting for Magento’. What works for your business and what doesn’t is a matter of research and of your marketing strategy. Some Magento stores need less power and less complexity, while others require several layers of complexity and a lot of raw hardware power. Choose smart, or talk to an expert, if you expect faster page loads and checkouts in your online store. Nobody loves slow-loading pages today. Your visitors won’t wait for your pages to load; they will simply leave.

Our Client’s store

For us here at Upment it’s always cloud-based hosting. BUT if you’re stuck with a local hosting company and you can trust them, go ahead. It is more expensive for high-traffic e-commerce stores, but if you are short on time and have tight deadlines, go for it. Just make sure to move to a better hosting strategy as soon as traffic slows down.

The client in question had grown their business while contracted with a local, Manchester-based hosting company for years, so the third option, local hosting, was the way for us to go. With 95% of traffic coming from the UK, a local UK hosting provider made sense.

For high-traffic stores, it’s always good to plan the Magento environment based on predictions, research and traffic statistics from the same period last year. For that, you should definitely talk to your marketing team and discuss traffic expectations.

Stack deployment based on predictions and current traffic

“Very roughly speaking, for November we would expect circa 1,750,000 users, which equates to approximately 2,725,000 sessions.

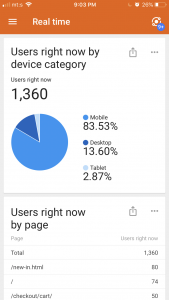

On Black Friday 2017, GA reports show we had 3,000 users in our peak hour (10% of the day’s total of 30,000 users); traffic was spread more evenly throughout the day than we would typically see.

That said, our busiest hour usually accounts for 10-12% of the day’s users.

Based on our financial targets for the day, we’re looking to do 3 times what we did last year.

That would be 90,000 users that day. Users typically spend double on that day, so we need half the traffic we would need on a typical day to hit our targets (on a typical day we would need 180,000 users for the same target).

If we plan for 120,000 users, I think that gives us breathing space.

That would mean 12,000 users in the peak hour. They also spend more time on the site that day, around 5.5 minutes. Let’s assume 6.

Crudely, that is 2,000 concurrent users we need to plan for. If we plan for a capacity of 2,500, I think we’re in a safer position.

December traffic is far more spread out than that. If we plan for this capacity, it will cover us well into August/October of the following year, when we’d expect to see that sort of traffic more usually.”
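The forecast above can be sanity-checked with Little’s law: concurrent users = arrival rate × time on site. The Python sketch below uses only the figures from the quote and assumes, as a simplification, that the peak hour’s users arrive evenly across the hour.

```python
# Figures taken from the marketing forecast quoted above.
BF_2017_USERS = 30_000    # Black Friday 2017 total users (GA)
GROWTH_FACTOR = 3         # financial target: 3x last year
PLANNED_USERS = 120_000   # rounded up "for breathing space"
PEAK_HOUR_SHARE = 0.10    # busiest hour is usually 10-12% of the day
SESSION_MINUTES = 6       # ~5.5 min on site, rounded up to 6


def peak_hour_users(daily_users: int, share: float) -> int:
    """Users expected in the busiest hour, given that hour's share of the day."""
    return round(daily_users * share)


def concurrent_users(hourly_users: int, minutes_on_site: float) -> float:
    """Little's law, L = lambda * W.

    lambda is the arrival rate in users per minute, W the minutes each user
    stays. Assumes arrivals are spread evenly across the hour.
    """
    arrivals_per_minute = hourly_users / 60
    return arrivals_per_minute * minutes_on_site


if __name__ == "__main__":
    target = BF_2017_USERS * GROWTH_FACTOR                  # 90,000
    peak = peak_hour_users(PLANNED_USERS, PEAK_HOUR_SHARE)  # 12,000
    steady = concurrent_users(peak, SESSION_MINUTES)        # 1,200
    # The 12% upper bound of the peak-hour share gives a higher estimate.
    high = concurrent_users(peak_hour_users(PLANNED_USERS, 0.12), SESSION_MINUTES)
    print(f"target users:            {target:,}")
    print(f"peak-hour users:         {peak:,}")
    print(f"concurrent (10% share):  {steady:,.0f}")
    print(f"concurrent (12% share):  {high:,.0f}")
```

Under the even-arrival assumption the estimate is 1,200 concurrent users (1,440 at a 12% peak-hour share), below the quote’s crude 2,000. Real arrivals are burstier than an even spread, so planning for 2,500 leaves generous headroom for spikes within the hour.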

Quite impressive marketing efforts in the first place! Driving this amount of traffic to an e-commerce business looks impossible to many agencies.

For us it was a BIG challenge to set up a proper environment that could handle this amount of traffic. We aren’t talking about visitors hitting the store every couple of minutes or hours. We are talking about 300-1500 concurrent users browsing, searching, adding products to carts and checking out at the very same moment.

Black Friday was approaching and we had 2 weeks to get everything ready.

The good thing about this project was that our client was already contracted with a local hosting company, so no migration strategy between providers had to keep our minds busy. The first principle, though, is to bring the store (its content) close to the visitors: if your traffic is US-based, don’t look for UK hosting companies. We live in the age of super-fast Internet, but network latency is something we don’t need, especially during the busiest time of the year traffic-wise.

Since 95% of the traffic was UK-based, integrating a CDN wouldn’t bring much in terms of page load times. A CDN is an important piece if you intend to pass Google PageSpeed Insights and tools like GTmetrix, but at this point it wasn’t something that would benefit our client’s store initially. The CDN strategy could wait.

As mentioned above, our favorite players in Magento hosting are Linode and AWS, but that wasn’t a concern this time. We had a team of local engineers (UKFast) as part of the project, and it was a matter of deploying a powerful infrastructure with multiple servers for the stack on which the latest Magento version, 1.9.4, should run smoothly.

Solution and deployment

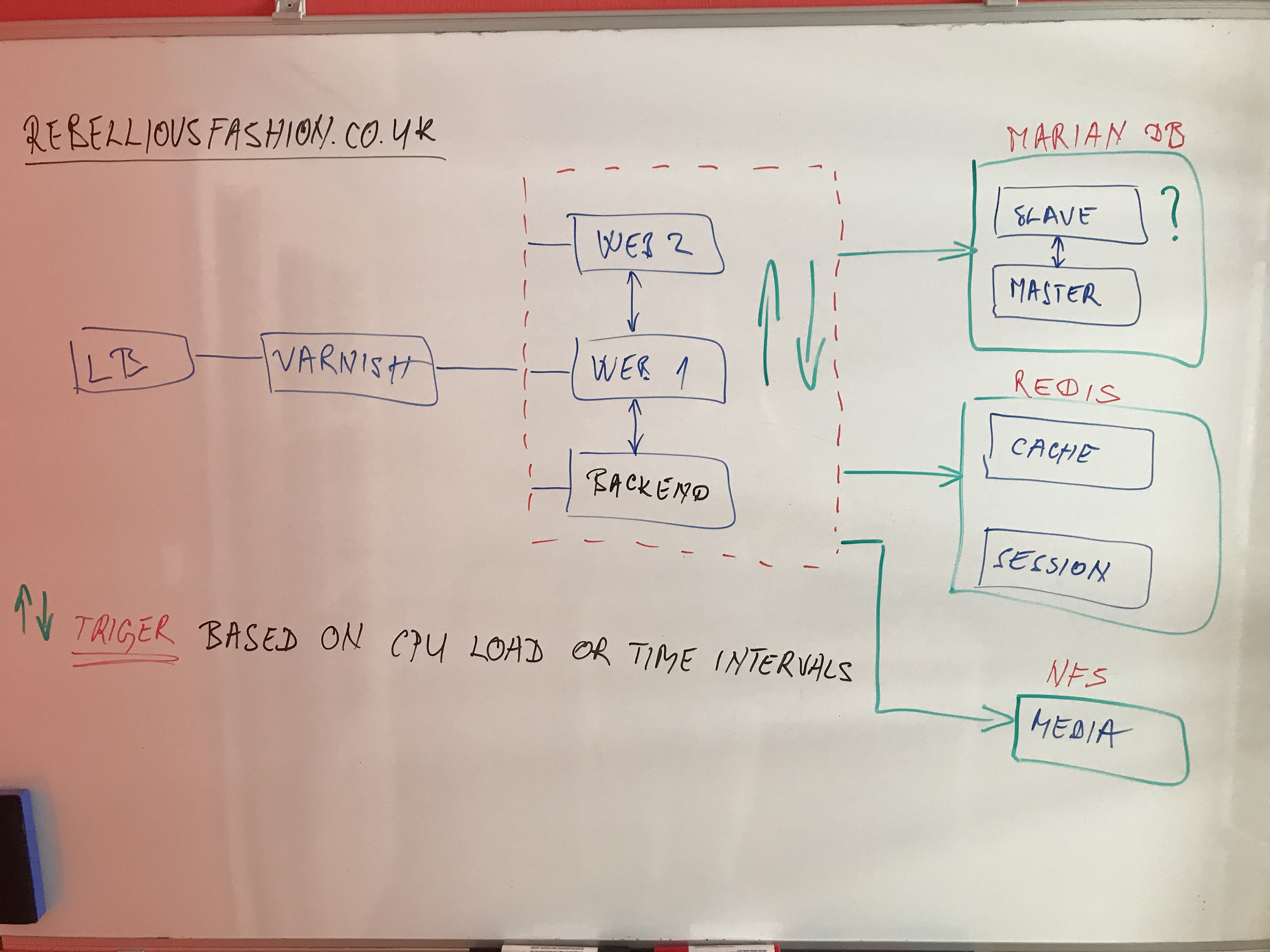

After a few conference calls and discussions with the engineers from the hosting company, we had a plan. We used our whiteboard to draw up an environment that should handle more than 1.5k concurrent users without problems. The drawing is a block diagram of the logical entities that should handle all the traffic. With a simplified picture of the logic:

- The client can easily understand the benefits of the new environment

- The engineers can follow up and deploy proper hardware

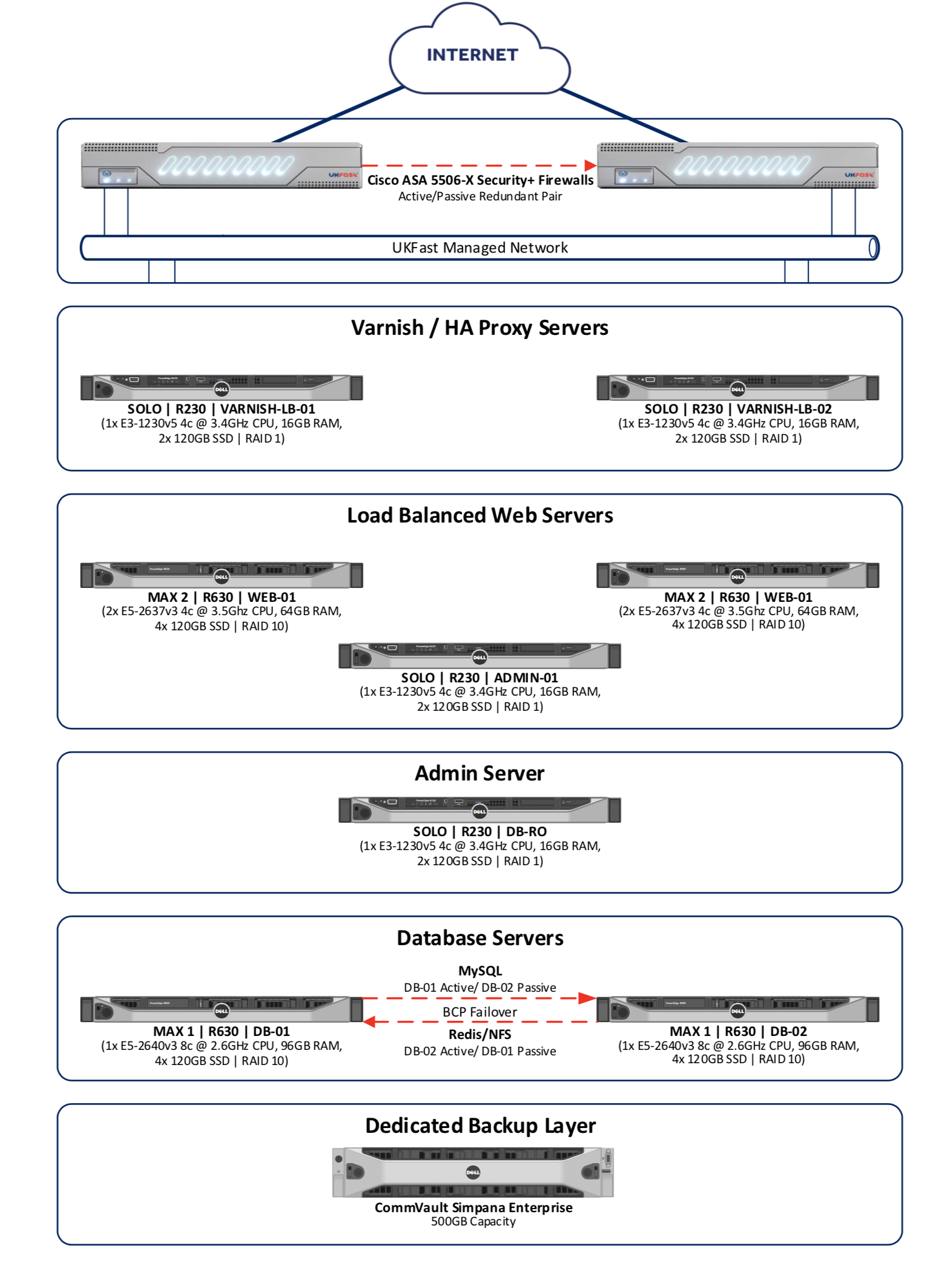

The hosting company came up with this hardware:

– Load balancer & Varnish cluster (1x E3-1230v5 4c @ 3.4GHz CPU, 16GB RAM, 2x 120GB SSD | RAID 1). This is a high-availability cluster using DRBD on CentOS, which replicates data across both nodes at block level to provide high availability and redundancy. Deploying to it works the same as deploying to a single server.

– 2x Web (nginx) servers (2x E5-2637v3 4c @ 3.5GHz CPU, 64GB RAM, 4x 120GB SSD | RAID 10)

– 1x Magento admin server (MAX I) (1x E3-1230v5 4c @ 3.4GHz CPU, 16GB RAM, 2x 120GB SSD | RAID 1). Besides the Magento admin, it runs cron jobs and general operations, and it can be added to the load-balanced pool as a web server at short notice or in an emergency.

– MySQL BCP cluster for the database, NFS and Redis. (1x E5-2640v3 8c @ 2.6GHz CPU, 96GB RAM, 4x 120GB SSD | RAID 10). From a CPU perspective, 8 physical cores (16 threads) should be plenty.

– Read-only MySQL server, so CommVault backups can be taken without affecting the live site.

– CommVault backup server. (CommVault Simpana Enterprise 500GB Capacity)

– Dedicated Cisco ASA 5506-X high availability firewalls.

That was the high-end hardware setup we got.

The only, and most important, downside of this setup is that we couldn’t use autoscaling. To scale up, we would need to submit a request to the hosting company, and they would need to add more nodes (physical servers) to the setup, which could take days. So we had to put more power into the new environment than the current traffic needed, to make sure no downtime happened during the busiest sales weeks of the year. You wouldn’t have this kind of problem in a cloud-based environment.
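What a cloud autoscaler automates can be sketched as a simple target-tracking rule: divide the current load by what one node handles comfortably, round up, and stay within fixed bounds. This is a simplified illustration, not AWS’s actual scaling algorithm; the per-node capacity and the min/max bounds are hypothetical values you would derive from your own load tests.

```python
import math


def desired_web_nodes(concurrent_users: int,
                      users_per_node: int,
                      min_nodes: int = 2,
                      max_nodes: int = 10) -> int:
    """Number of web nodes a simple target-tracking rule would ask for.

    users_per_node: load one node handles comfortably (hypothetical; measure it).
    min_nodes/max_nodes: hypothetical bounds that keep redundancy and cap cost.
    """
    if users_per_node <= 0:
        raise ValueError("users_per_node must be positive")
    needed = math.ceil(concurrent_users / users_per_node)
    # Clamp to the allowed range.
    return max(min_nodes, min(max_nodes, needed))


if __name__ == "__main__":
    # Assuming 750 concurrent users per node (hypothetical figure):
    print(desired_web_nodes(2500, 750))  # peak: 4 nodes
    print(desired_web_nodes(400, 750))   # quiet period: minimum of 2
```

In the cloud this rule runs continuously and nodes appear within minutes; on dedicated hardware each step up is a request to the hosting company, which is why we provisioned for the peak in advance.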

If you wonder how much this kind of Magento hosting costs, click here to see the monthly fees. I think we could deploy the same setup on AWS for much less, but at that point we didn’t have time for an AWS strategy. Current traffic is between 300-500 concurrent users, with 1,000-1,500 sales closed daily, and it is expected to triple in the coming week. We will probably migrate to AWS at some point after the holiday sales.

Fully optimized stack for Magento 1.9.x

The hardware was set up in a week, and we had another week to migrate all data and fine-tune the HAProxy, nginx, MySQL, OPcache, PHP 7.2 and Varnish settings for the expected traffic. The Varnish VCL wasn’t ready yet, and since we were short on time, we decided to go live without Varnish initially. Setting up Varnish for Magento 1.9.x can often be tricky, even with the Turpentine module and ESI support.
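The exact tuned values aren’t reproduced in this section, but one common starting point on the PHP side is to size PHP-FPM’s `pm.max_children` from memory: RAM left over after everything else, divided by the average size of one worker. A minimal sketch of that heuristic follows; the reserved RAM and per-worker size are assumptions you would replace with measurements from your own nodes.

```python
def fpm_max_children(total_ram_mb: int,
                     reserved_mb: int,
                     avg_worker_mb: int) -> int:
    """Memory-bound estimate for PHP-FPM's pm.max_children.

    total_ram_mb:  physical RAM on the web node
    reserved_mb:   RAM kept back for the OS, nginx, OPcache and file cache
    avg_worker_mb: average resident size of one PHP-FPM worker, measured
                   under realistic load (e.g. from `ps -o rss` output)
    """
    usable = total_ram_mb - reserved_mb
    if usable <= 0 or avg_worker_mb <= 0:
        raise ValueError("no memory left for PHP-FPM workers")
    return usable // avg_worker_mb


if __name__ == "__main__":
    # 64GB web node from the hardware list; the 8GB reserve and 256MB
    # worker size are hypothetical values, not measurements from this store.
    print(fpm_max_children(64 * 1024, 8 * 1024, 256))  # 224
```

Treat the result as a memory ceiling rather than a target: CPU-bound Magento pages can saturate the available cores before RAM runs out, so load-test before settling on a number.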

We used our fully optimized Upment stack for Magento, with a few important updates to our LEMP stack so it doesn’t get stuck when 2,500 users land on a product or category page at once. All config files for the services that handle this amount of traffic are available via our git repo here.

The migration from the current server to the new stack (same datacenter) was scheduled for 5am. Early morning was the only period of the day when traffic dropped to 30-40 concurrent users (which would still be a decent amount of traffic for other stores). The final switch from the old server to the new stack was done at the name server (DNS) level. That way we didn’t miss any sales during the switch: zero downtime. Within a few minutes everything was set and traffic was landing on the new stack. Perfect!

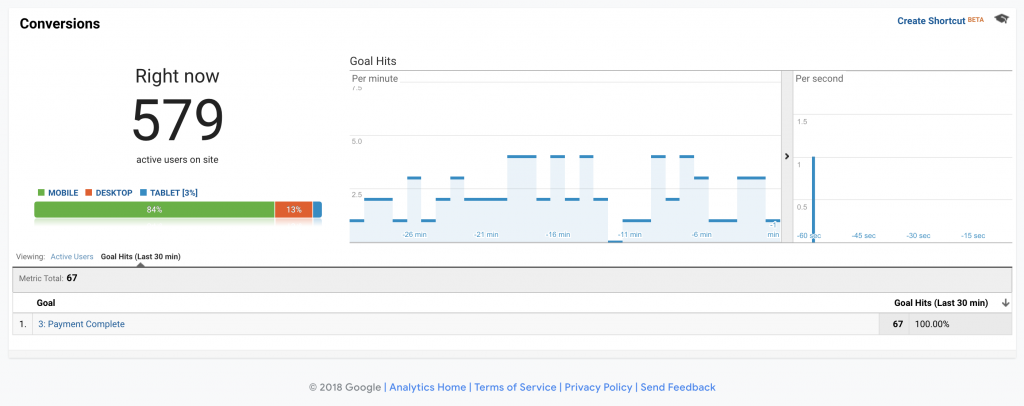

Since we had deployed the new environment based on predictions, it was time for the last step: the stress test. After making sure that sales were closing successfully on the new stack, we scheduled a stress test for the next morning at the same time, 5am, the only time when traffic drops close to zero. The test lasted around 9 minutes, and here are the expected results.
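The load-testing tool used isn’t named here, and dedicated tools such as JMeter, Gatling or Locust are the usual choice. Purely to illustrate the idea, here is a minimal stdlib-only Python sketch that fires a fixed number of GET requests at one URL with a set number of parallel workers and reports successes, errors and median response time. The URL is hypothetical. A realistic Magento test would also script browsing, search, add-to-cart and checkout flows, and would run for a fixed duration (ours ran about 9 minutes) rather than a fixed request count.

```python
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from statistics import median


def fetch(url: str, timeout: float = 10.0):
    """GET one URL; return (HTTP status or None on any error, elapsed seconds)."""
    start = time.perf_counter()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            resp.read()
            status = resp.status
    except Exception:
        # Timeouts, connection errors and non-2xx responses (HTTPError) all count as failures.
        status = None
    return status, time.perf_counter() - start


def stress(url: str, concurrency: int, total_requests: int) -> dict:
    """Send total_requests GETs to url using `concurrency` parallel workers."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(fetch, [url] * total_requests))
    ok_times = [elapsed for status, elapsed in results if status == 200]
    return {
        "requests": total_requests,
        "ok": len(ok_times),
        "errors": total_requests - len(ok_times),
        "median_s": median(ok_times) if ok_times else None,
    }


if __name__ == "__main__":
    # Hypothetical staging URL; only point this at hosts you are allowed to test.
    print(stress("https://staging.example.com/", concurrency=50, total_requests=500))
```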