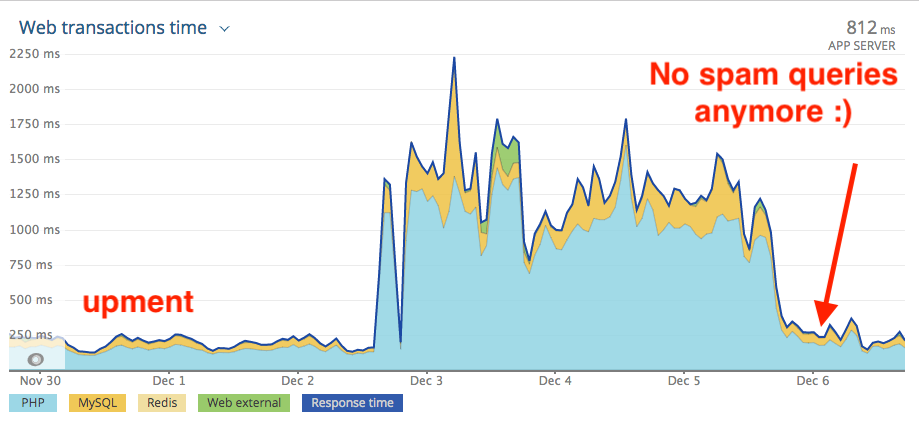

A spam attack started in the middle of Q4, just two weeks before Christmas sales. The attack was detected through our New Relic account. Soon everybody was aware that we had a heavy server load with no more than the usual number of visitors to the store.

It was time for panic. 🙂

This Magento-based store ran in a multi-server environment: a load balancer, two web servers, MySQL replication, and Redis for cache and session storage.

Even with a firewall in front of our Magento store, those spammy search queries were still landing on our backend. The picture above shows what was happening to both of our web servers during the spam attack.

The strategy

The first idea was to blacklist all IP addresses that were sending spam requests. The problem was that the pool of infected IP addresses was huge: the source IP address changed after only a few requests, roughly every 10 seconds. So how do we filter all those IP addresses?

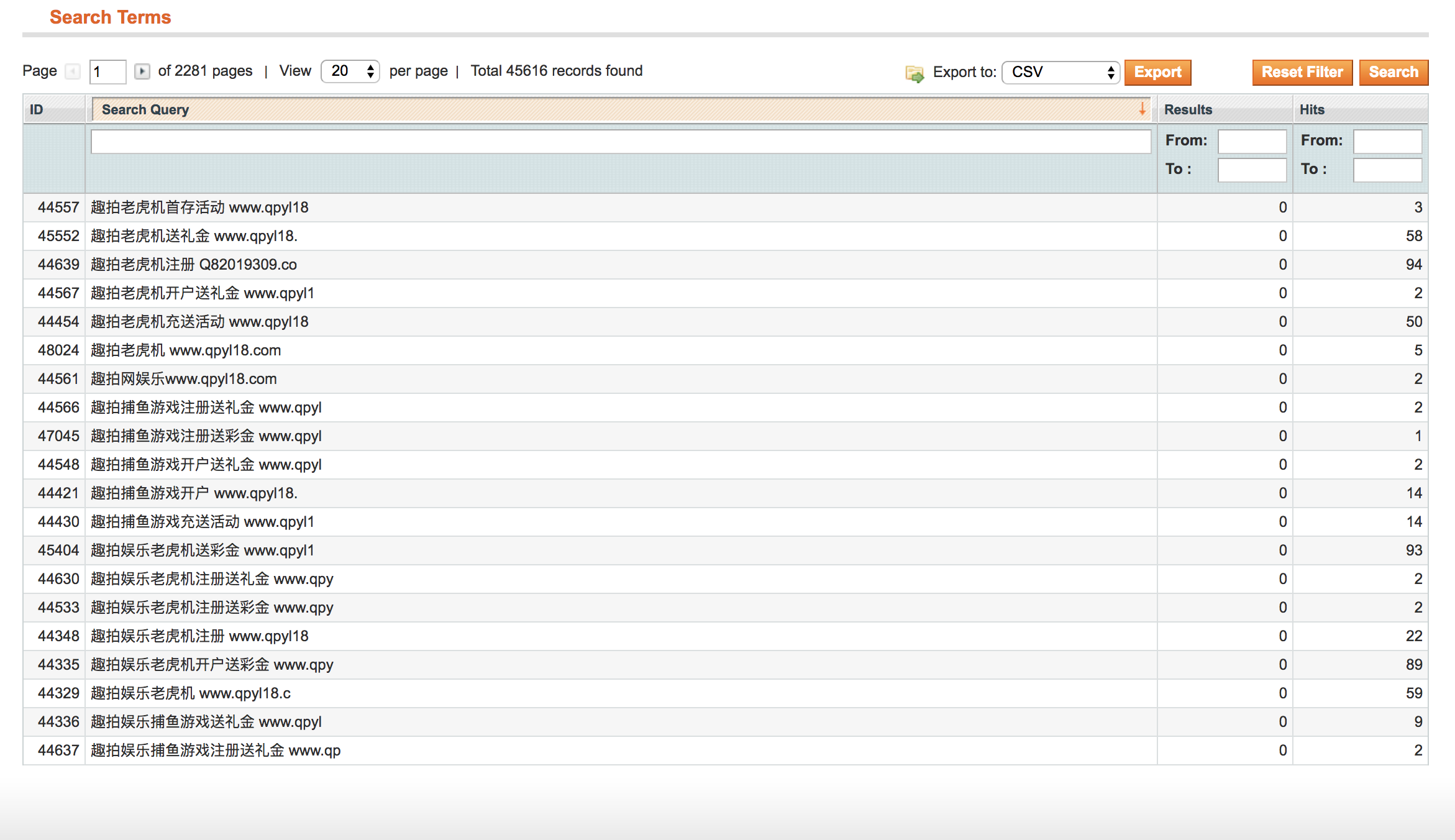

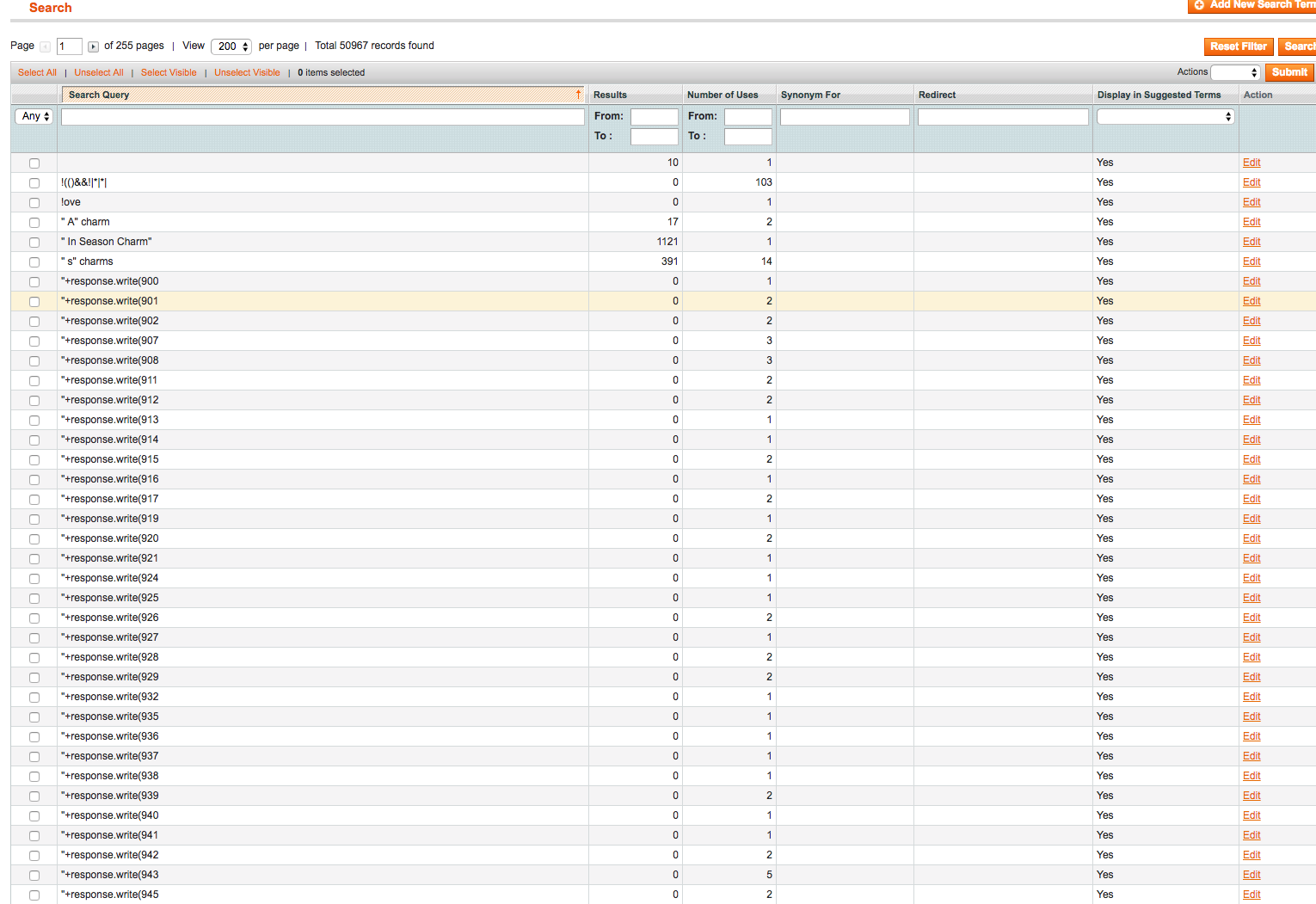

The second idea was to respond with “403: Forbidden” to all the spam queries that we found in the Magento backend (Catalog -> Search Terms). We had a bunch of them; this is just a small sample:

大奖MG老虎机开户 Q82019309.com

Since we use Haproxy for SSL termination and load balancing, that was where we started to look for a possible solution: it is our landing node, the one with a public IP address assigned.

Haproxy has a great built-in ACL fetch that checks whether the value of a given query string parameter contains one of the listed substrings:

urlp_sub()

You use it to define Access Control Lists (ACLs) in the haproxy.cfg file, passing the parameter name in parentheses. We added the -i flag to make the match case-insensitive.

Our first few rules, which were supposed to block the search query spam stream, looked like this:

acl spamreq urlp_sub(q) -i passwd win.ini web.xml system32 ../..

acl spamreq urlp_sub(type) -i passwd win.ini web.xml system32 ../..

acl spamreq urlp_sub(price) -i passwd win.ini web.xml system32 ../..

http-request deny if spamreq

But that wasn’t enough. The spam bot was smart! It modified the spam strings soon after we added those rules to Haproxy and started using new query requests, for example:

GET /catalogsearch/result/index?cat=292&mode=list&price=100-200&q=././././some_inexistent
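The request above slips past the first rule set because none of the listed substrings occurs in its q value. A rough shell approximation of that substring test makes this easy to check before touching haproxy.cfg. This is only a sketch: the helper names get_param and is_spam are ours, not part of Haproxy, and the comparison is done on the raw, undecoded parameter value.

```shell
#!/bin/bash

# Print the raw value of one query parameter from a request URL.
# Nothing is URL-decoded; the value is used exactly as it was sent.
get_param() {
    case "$1" in
        *\?*) ;;            # the URL has a query string, carry on
        *) return 0 ;;      # no query string, nothing to print
    esac
    # Put each key=value pair on its own line, then print the first match.
    printf '%s\n' "${1#*\?}" | tr '&' '\n' |
        awk -v name="$2" 'index($0, name "=") == 1 { print substr($0, length(name) + 2); exit }'
}

# Succeed (exit 0) if the parameter value contains any of the patterns,
# ignoring case. Usage: is_spam URL PARAM PATTERN...
is_spam() {
    local url="$1" name="$2" value pattern
    shift 2
    value=$(get_param "$url" "$name" | tr '[:upper:]' '[:lower:]')
    [ -n "$value" ] || return 1
    for pattern in "$@"; do
        pattern=$(printf '%s' "$pattern" | tr '[:upper:]' '[:lower:]')
        case "$value" in
            *"$pattern"*) return 0 ;;
        esac
    done
    return 1
}

url='/catalogsearch/result/index?cat=292&mode=list&price=100-200&q=././././some_inexistent'

# First rule set: no listed substring occurs in the q value.
if is_spam "$url" q passwd win.ini web.xml system32 ../..; then echo blocked; else echo passed; fi
# prints "passed"

# Same list with one more substring added.
if is_spam "$url" q passwd win.ini web.xml system32 ../.. some_inexistent; then echo blocked; else echo passed; fi
# prints "blocked"
```

Running a handful of logged requests through a check like this shows quickly which substrings still need to be added to the ACL.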

Servers were still under heavy load!!

We had to extend the query list in our haproxy.cfg file with new search queries that we found. We also added a 3-5 second delay before responding with a 403, to try to slow down the bot:

acl spamreq urlp_sub(q) -i passwd win.ini web.xml system32 ../.. response.write proc/version nslookup some_inexistent set%7cset -1%20OR%20 %24{ acunetix \x22 !(()%26%261 1*1*1 vulnweb ***** waitfor%20delay pg_sleep )))) <!-- %40%40UpLap

acl spamreq urlp_sub(type) -i passwd win.ini web.xml system32 ../.. response.write proc/version nslookup some_inexistent set%7cset -1%20OR%20 %24{ acunetix \x22 !(()%26%26 1*1*1 vulnweb ***** waitfor%20delay pg_sleep )))) <!-- %40%40UpLap

acl spamreq urlp_sub(price) -i passwd win.ini web.xml system32 ../.. response.write proc/version nslookup some_inexistent set%7cset -1%20OR%20 %24{ acunetix \x22 !(()%26%26 1*1*1 vulnweb ***** waitfor%20delay pg_sleep )))) <!-- %40%40UpLap

acl spamreq urlp_sub(dir) -i passwd win.ini web.xml system32 ../.. response.write proc/version nslookup some_inexistent set%7cset -1%20OR%20 %24{ acunetix \x22 !(()%26%26 1*1*1 vulnweb ***** waitfor%20delay pg_sleep )))) <!-- %40%40UpLap

acl spamreq urlp_sub(order) -i passwd win.ini web.xml system32 ../.. response.write proc/version nslookup some_inexistent set%7cset -1%20OR%20 %24{ acunetix \x22 !(()%26%26 1*1*1 vulnweb ***** waitfor%20delay pg_sleep )))) <!-- %40%40UpLap

acl spamreq urlp_sub(p) -i passwd win.ini web.xml system32 ../.. response.write proc/version nslookup some_inexistent set%7cset -1%20OR%20 %24{ acunetix \x22 !(()%26%26 1*1*1 vulnweb ***** waitfor%20delay pg_sleep )))) <!-- %40%40UpLap

http-request lua.delay_request if spamreq

http-request deny if spamreq

Much better! As a result we had a bunch of 403 Forbidden responses in our Haproxy log file, sent to many different IP addresses. That was awesome!! But the spam bot was smart again: it started to request randomly generated URLs with the spam string embedded in the URL itself.

You can see the difference here:

The first line on the image above is an example of a Magento spam search query request, while the second line is an example of a Magento URL spam request with the spam/exploit string included. The URL requests were still passing through our Haproxy spam filter, since we were only looking at the GET query string, not the URL itself.

We took another look at the Haproxy log file

Even during an interval of URL spam requests, the spam bot sometimes attempted to submit search query requests, BUT these got rejected by our Haproxy filter (403). It seems that the spam bot was actually not that smart. 🙂 As soon as a search query request was detected, we had the offending IP address. And that was the key!

If we blacklisted all IP addresses that received a 403 response, we could block this spam attack!

We created a quick & dirty shell script to retrieve all IP addresses with a 403 response from the Haproxy log file, and we blacklisted these IP addresses with APF (Advanced Policy Firewall):
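To make the difference concrete, here is a small sketch with two made-up request URLs (hypothetical, not taken from our logs). The helper name query_of is ours. It prints the only part of the request that a query parameter check ever inspects.

```shell
#!/bin/bash

# Print only the query string of a request URL (empty if there is none).
query_of() {
    case "$1" in
        *\?*) printf '%s\n' "${1#*\?}" ;;
        *)    printf '\n' ;;
    esac
}

# Hypothetical examples of the two request shapes.
search_spam='/catalogsearch/result/?q=some_inexistent'
url_spam='/some_inexistent/random-page.html'

echo "search request, query part: '$(query_of "$search_spam")'"
echo "URL request, query part:    '$(query_of "$url_spam")'"
```

For the second request the query part is empty, so a rule that only tests query parameters has nothing to match against, even though the spam string is plainly present in the path.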

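#!/bin/bash

# Collect the client IPs of requests that Haproxy rejected itself (no backend server chosen) in the last 200 log lines.

# With this log format the client IP is the fourth ':'-separated field.

HAPLOG=$(/usr/bin/tail -n 200 /var/log/haproxy.log | grep "http-in/<NOSRV>" | /usr/bin/cut -d':' -f4 | /usr/bin/sort -u)

# Deny each address in APF; the second argument is the comment stored with the rule.

for IPADD in $HAPLOG

do

/usr/sbin/apf -d "$IPADD" "Spam/attack"

done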
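A slightly more defensive take on the extraction step is sketched below. It is not the script we ran: the function name denied_ips, the explicit check for a 403 status field, and the IP:port pattern match are additions of ours, and the log lines used to try it out should be treated as an assumed format rather than a copy of our real log.

```shell
#!/bin/bash

# Read Haproxy log lines on stdin and print, once each, every client IPv4
# address whose request was answered by the frontend itself with a 403.
denied_ips() {
    grep -F 'http-in/<NOSRV>' |
        awk '{
            # Skip lines that do not carry a 403 status field.
            is403 = 0
            for (i = 1; i <= NF; i++) if ($i == "403") is403 = 1
            if (!is403) next
            # The client field has the form IP:port; print the IP part only.
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+:[0-9]+$/) {
                    sub(/:[0-9]+$/, "", $i)
                    print $i
                    break
                }
            }
        }' |
        sort -u
}

# Possible use, mirroring the original loop:
#   /usr/bin/tail -n 200 /var/log/haproxy.log | denied_ips |
#       while read -r ip; do /usr/sbin/apf -d "$ip" "Spam/attack"; done
```

Matching on the IP:port shape instead of a fixed field position means a change in the syslog prefix does not silently feed garbage to the firewall.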

It’s available for download on our GitHub as well: https://github.com/Upment/haproxy-apf-blocker

We set it up as a cron job that runs every minute, and then all we had to do was wait a few hours while the script blacklisted a decent number of spam-source IP addresses.
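For reference, a crontab line for a once-a-minute job could be produced as sketched here. The script path and lock file path are hypothetical, the helper name cron_line is ours, and wrapping the job in flock (from util-linux, assumed to live at /usr/bin/flock) is an optional extra so that a slow run is never overlapped by the next one.

```shell
#!/bin/bash

# Print a crontab line that runs the given script every minute.
# flock -n makes a run exit immediately if the previous one still holds the lock.
# Usage: cron_line SCRIPT_PATH LOCK_FILE
cron_line() {
    printf '* * * * * /usr/bin/flock -n %s %s\n' "$2" "$1"
}

# Hypothetical paths; adjust to wherever the script is installed.
cron_line /usr/local/sbin/haproxy-apf-blocker.sh /var/lock/haproxy-apf-blocker.lock
```

The printed line can then be added to root's crontab with crontab -e.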

In the morning a short look into our New Relic account made us all happy again:

A couple of hours of quality work saved this Magento store a lot of bandwidth and restored the shoppers' ability to browse and check out quickly. The conversion rate (CR) was back to normal and the client was happy. Everybody was happy again, since we had blocked a heavy spam attack in the middle of the busiest sales month of the year.

Well, except the now locked-out spammer. 😉